Messaging

Pulsar is built on the publish-subscribe pattern (often abbreviated to pub-sub). In this pattern, producers publish messages to topics; consumers subscribe to those topics, process incoming messages, and send acknowledgements to the broker when processing is finished.

When a subscription is created, Pulsar retains all messages, even if the consumer is disconnected. The retained messages are discarded only when a consumer acknowledges that all these messages are processed successfully.

If the consumption of a message fails and you want this message to be consumed again, you can have the broker redeliver it automatically. To do so, either send a negative acknowledgement to the broker or enable the acknowledgement timeout for unacknowledged messages.

Messages

Messages are the basic "unit" of Pulsar. The following table lists the components of messages.

| Component | Description |
|---|---|
| Value / data payload | The data carried by the message. All Pulsar messages contain raw bytes, although message data can also conform to data schemas. |
| Key | Messages are optionally tagged with keys, which is useful for things like topic compaction. |
| Properties | An optional key/value map of user-defined properties. |
| Producer name | The name of the producer that produces the message. If you do not specify a producer name, the default name is used. |
| Sequence ID | Each Pulsar message belongs to an ordered sequence on its topic. The sequence ID of the message is its position in that sequence. |
| Publish time | The timestamp of when the message is published. The timestamp is automatically applied by the producer. |
| Event time | An optional timestamp attached to a message by applications. For example, an application can attach a timestamp indicating when the message was processed. If no event time is set, the value is 0. |
| TypedMessageBuilder | Used to construct a message. You can set message properties, such as the message key and message value, with TypedMessageBuilder. When you use TypedMessageBuilder, set the key as a string. If you set the key as another type, for example an AVRO object, the key is sent as bytes, and it is difficult to get the AVRO object back on the consumer. |

The default maximum size of a message is 5 MB. You can configure the maximum message size with the following configurations.

- In the broker.conf file:

  # The max size of a message (in bytes).
  maxMessageSize=5242880

- In the bookkeeper.conf file:

  # The max size of the netty frame (in bytes). Any messages received larger than this value are rejected. The default value is 5 MB.
  nettyMaxFrameSizeBytes=5253120

For more information on Pulsar messages, see Pulsar binary protocol.

Producers

A producer is a process that attaches to a topic and publishes messages to a Pulsar broker. The Pulsar broker processes the messages.

Send modes

Producers send messages to brokers synchronously (sync) or asynchronously (async).

| Mode | Description |
|---|---|
| Sync send | The producer waits for an acknowledgement from the broker after sending every message. If the acknowledgement is not received, the producer treats the sending operation as a failure. |
| Async send | The producer puts a message in a blocking queue and returns immediately. The client library sends the message to the broker in the background. If the queue is full (you can configure the maximum size), the producer is blocked or fails immediately when calling the API, depending on arguments passed to the producer. |
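The queue-full behavior of async send can be sketched with the JDK alone. The example below is a hypothetical model, not the Pulsar client's implementation. PendingQueueSketch and its blockIfFull flag are illustrative names that loosely mirror the maxPendingMessages and blockIfQueueFull settings of the Java ProducerBuilder. A bounded ArrayBlockingQueue stands in for the producer's pending-message queue: put() models blocking, and offer() models failing immediately.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical model of an async producer's pending-message queue.
// Not Pulsar client code: it only contrasts "block" with "fail immediately" when full.
class PendingQueueSketch {
    private final BlockingQueue<byte[]> pending;
    private final boolean blockIfFull;

    PendingQueueSketch(int maxPending, boolean blockIfFull) {
        this.pending = new ArrayBlockingQueue<>(maxPending);
        this.blockIfFull = blockIfFull;
    }

    /** Enqueues a message for background sending; returns false if it was rejected. */
    boolean sendAsync(byte[] payload) {
        if (!blockIfFull) {
            return pending.offer(payload);   // false right away when the queue is full
        }
        try {
            pending.put(payload);            // waits until the background sender frees a slot
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    /** Simulates the background sender handing the oldest message to the broker. */
    byte[] drainOne() {
        return pending.poll();
    }

    int size() {
        return pending.size();
    }
}
```

With blockIfFull set to false, a third sendAsync() call on a queue of capacity 2 returns false at once. With it set to true, the same call would wait in put() until another thread calls drainOne().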

Access mode

Producers can use different access modes on a topic.

| Access mode | Description |
|---|---|
| Shared | Multiple producers can publish on a topic. This is the default setting. |
| Exclusive | Only one producer can publish on a topic. If there is already a producer connected, other producers trying to publish on this topic get errors immediately. If the "old" producer experiences a network partition with the broker, it is evicted and a "new" producer is selected to be the next exclusive producer. |
| WaitForExclusive | If there is already a producer connected, the producer creation is pending (rather than timing out) until the producer gets the Exclusive access. The producer that succeeds in becoming the exclusive one is treated as the leader. Consequently, if you want to implement a leader election scheme for your application, you can use this access mode. |

Once an application creates a producer with Exclusive or WaitForExclusive access mode successfully, the instance of this application is guaranteed to be the only writer to the topic. Any other producers trying to produce messages on this topic will either get errors immediately or have to wait until they get the Exclusive access.

For more information, see PIP 68: Exclusive Producer.

You can set the producer access mode through the Java client API. For more information, see ProducerAccessMode in the ProducerBuilder.java file.
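The Exclusive and WaitForExclusive rules can be modeled as a small state machine. This is a hypothetical in-memory sketch (TopicAccessSketch is not a Pulsar class) that ignores Shared mode, networking, and fencing. It only shows that an Exclusive request fails at once, while a WaitForExclusive request queues and becomes the leader when the current owner leaves.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Optional;

// Hypothetical in-memory model of Exclusive and WaitForExclusive producer access.
// Not Pulsar's broker logic: no networking, fencing, or Shared mode.
class TopicAccessSketch {
    enum Mode { EXCLUSIVE, WAIT_FOR_EXCLUSIVE }
    enum Result { CONNECTED, REJECTED, PENDING }

    private String owner;                          // current exclusive producer, or null
    private final Deque<String> waiting = new ArrayDeque<>();

    Result connect(String producer, Mode mode) {
        if (owner == null) {
            owner = producer;                      // first producer becomes the leader
            return Result.CONNECTED;
        }
        if (mode == Mode.EXCLUSIVE) {
            return Result.REJECTED;                // Exclusive fails immediately
        }
        waiting.addLast(producer);                 // WaitForExclusive stays pending
        return Result.PENDING;
    }

    /** The current owner goes away; the longest-waiting producer (if any) takes over. */
    Optional<String> ownerLeaves() {
        owner = waiting.pollFirst();
        return Optional.ofNullable(owner);
    }

    String leader() {
        return owner;
    }
}
```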

Compression

You can compress messages published by producers during transport. Pulsar currently supports the following types of compression:

Batching

When batching is enabled, the producer accumulates messages and sends them as a batch in a single request. The batch size is bounded by the maximum number of messages and the maximum publish latency. As a result, the backlog size represents the total number of batches instead of the total number of messages.

In Pulsar, batches are tracked and stored as single units rather than as individual messages. The consumer unbundles a batch into individual messages. However, scheduled messages (configured through the deliverAt or the deliverAfter parameter) are always sent as individual messages, even if batching is enabled.

In general, a batch is acknowledged when all of its messages are acknowledged by a consumer. This means that if not all messages in a batch are acknowledged, unexpected failures, negative acknowledgements, or acknowledgement timeouts can result in a redelivery of all messages in the batch.

To avoid redelivering acknowledged messages in a batch to the consumer, Pulsar has supported batch index acknowledgement since Pulsar 2.6.0. When batch index acknowledgement is enabled, the consumer filters out the batch indexes that have been acknowledged and sends the batch index acknowledgement request to the broker. The broker maintains the acknowledgement status of each batch index to avoid dispatching acknowledged messages to the consumer. The batch is deleted when all indexes of the messages in it are acknowledged.

By default, batch index acknowledgement is disabled (acknowledgmentAtBatchIndexLevelEnabled=false). You can enable it by setting the acknowledgmentAtBatchIndexLevelEnabled parameter to true on the broker side. Enabling batch index acknowledgement increases memory overhead.
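A BitSet is one natural way to picture per-index acknowledgement state for a single batch. The sketch below is a hypothetical model, not the broker's data structure. It records acknowledged indexes, reports which indexes would still be redelivered, and signals when the whole batch could be deleted.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

// Hypothetical model of batch index acknowledgement for one batch entry.
// Not Pulsar's implementation: a BitSet records which indexes were acknowledged.
class BatchAckSketch {
    private final int batchSize;
    private final BitSet acked;

    BatchAckSketch(int batchSize) {
        this.batchSize = batchSize;
        this.acked = new BitSet(batchSize);
    }

    void ack(int index) {
        if (index < 0 || index >= batchSize) {
            throw new IndexOutOfBoundsException("index " + index);
        }
        acked.set(index);
    }

    /** With batch index acknowledgement, only unacknowledged indexes are dispatched again. */
    List<Integer> indicesToRedeliver() {
        List<Integer> result = new ArrayList<>();
        for (int i = acked.nextClearBit(0); i < batchSize; i = acked.nextClearBit(i + 1)) {
            result.add(i);
        }
        return result;
    }

    /** The batch can be deleted once every index has been acknowledged. */
    boolean canDelete() {
        return acked.cardinality() == batchSize;
    }
}
```

Without batch index acknowledgement, the same partially acknowledged batch would be redelivered in full, which is the behavior the preceding paragraphs describe.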

Chunking

- Chunking is only available for persistent topics.

- Chunking is only available for the exclusive and failover subscription types.

- Chunking cannot be enabled simultaneously with batching. Before enabling chunking, you need to disable batching.

When chunking is enabled (chunkingEnabled=true), if the message size is greater than the allowed maximum publish-payload size, the producer splits the original message into chunked messages and publishes them with chunked metadata to the broker separately and in order. On the broker side, the chunked messages are stored in the managed-ledger in the same way as ordinary messages. The only difference is that the consumer needs to buffer the chunked messages and combine them into the real message when all chunked messages have been collected. The chunked messages in the managed-ledger can be interwoven with ordinary messages. If a producer fails to publish all the chunks of a message, the consumer can expire the incomplete chunks when it does not receive all of them within the expiry time. By default, the expiry time is one minute.

The consumer consumes the chunked messages and buffers them until it receives all the chunks of a message. The consumer then stitches the chunked messages together and places them into the receiver-queue. Clients consume messages from the receiver-queue. Once the consumer consumes the entire large message and acknowledges it, the consumer internally sends acknowledgements for all the chunk messages associated with that large message. You can limit how many chunked messages the consumer buffers with the maxPendingChunkedMessage parameter. When the threshold is reached, the consumer drops the pending (not yet reassembled) chunked messages, either by silently acknowledging them or by asking the broker to redeliver them later by marking them unacknowledged.

The broker does not require any changes to support chunking for non-shared subscriptions. The broker only uses chunkedMessageRate to record the chunked message rate on the topic.

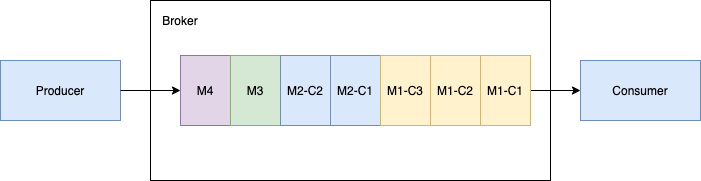

Handle chunked messages with one producer and one ordered consumer

As shown in the following figure, a topic has one producer that publishes a large message payload as chunked messages along with regular non-chunked messages. The producer publishes message M1 in three chunks: M1-C1, M1-C2, and M1-C3. The broker stores all three chunked messages in the managed-ledger and dispatches them to the ordered (exclusive/failover) consumer in the same order. The consumer buffers all the chunked messages in memory until it receives all of them, combines them into one message, and then hands the original message M1 over to the client.

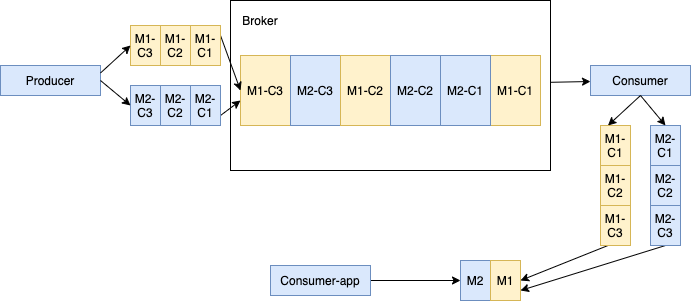

Handle chunked messages with multiple producers and one ordered consumer

When multiple producers publish chunked messages into a single topic, the broker stores all the chunked messages coming from different producers in the same managed-ledger. As shown in the figure, Producer 1 publishes message M1 in three chunks: M1-C1, M1-C2, and M1-C3. Producer 2 publishes message M2 in three chunks: M2-C1, M2-C2, and M2-C3. All chunked messages of a specific message are still in order but might not be consecutive in the managed-ledger. This puts some memory pressure on the consumer, because the consumer keeps a separate buffer for each large message to aggregate all of its chunks and combine them into one message.
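The split-and-reassemble flow, including interleaved chunks from two producers, can be sketched in plain Java. This is a hypothetical model. Chunk, messageId, and maxChunkSize are illustrative names rather than Pulsar's chunk metadata fields, and the sketch assumes, as described above, that the chunks of any one message arrive in order. The consumer keeps one buffer per in-progress message, which is where the memory pressure mentioned above comes from.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of chunking: split a payload into ordered chunks and reassemble
// them on the consumer, keeping one buffer per large message. Not Pulsar's metadata format.
class ChunkingSketch {
    static final class Chunk {
        final String messageId;
        final int chunkId;
        final int totalChunks;
        final byte[] data;

        Chunk(String messageId, int chunkId, int totalChunks, byte[] data) {
            this.messageId = messageId;
            this.chunkId = chunkId;
            this.totalChunks = totalChunks;
            this.data = data;
        }
    }

    /** Producer side: split the payload into chunks no larger than maxChunkSize. */
    static List<Chunk> split(String messageId, byte[] payload, int maxChunkSize) {
        int total = Math.max(1, (payload.length + maxChunkSize - 1) / maxChunkSize);
        List<Chunk> chunks = new ArrayList<>();
        for (int i = 0; i < total; i++) {
            int from = i * maxChunkSize;
            int to = Math.min(payload.length, from + maxChunkSize);
            chunks.add(new Chunk(messageId, i, total, Arrays.copyOfRange(payload, from, to)));
        }
        return chunks;
    }

    // Consumer side: one buffer per in-progress message, so chunks of
    // different messages may be interleaved in the ledger.
    private final Map<String, ByteArrayOutputStream> buffers = new HashMap<>();

    /** Returns the reassembled payload when the last chunk arrives, otherwise null. */
    byte[] onChunk(Chunk c) {
        ByteArrayOutputStream buf =
                buffers.computeIfAbsent(c.messageId, k -> new ByteArrayOutputStream());
        buf.write(c.data, 0, c.data.length);
        if (c.chunkId == c.totalChunks - 1) {
            buffers.remove(c.messageId);
            return buf.toByteArray();
        }
        return null;
    }

    int pendingMessages() {
        return buffers.size();
    }
}
```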

Consumers

A consumer is a process that attaches to a topic via a subscription and then receives messages.

A consumer sends a flow permit request to a broker to get messages. There is a queue on the consumer side to receive messages pushed from the broker. You can configure the queue size with the receiverQueueSize parameter (the default size is 1000). Each time consumer.receive() is called, a message is dequeued from the buffer.

Receive modes

Messages are received from brokers either synchronously (sync) or asynchronously (async).

| Mode | Description |
|---|---|
| Sync receive | A sync receive blocks until a message is available. |
| Async receive | An async receive returns immediately with a future value (for example, a CompletableFuture in Java) that completes once a new message is available. |

Listeners

Client libraries provide listener implementations for consumers. For example, the Java client provides a MessageListener interface. In this interface, the received method is called whenever a new message is received.

Acknowledgement

The consumer sends an acknowledgement request to the broker after it consumes a message successfully. The message is then retained until all subscriptions have acknowledged it, after which it is deleted. If you want to keep messages that have been acknowledged by a consumer, you need to configure the message retention policy.

For batch messages, you can enable batch index acknowledgement to avoid dispatching acknowledged messages to the consumer. For details about batch index acknowledgement, see batching.

Messages can be acknowledged in one of the following two ways:

- Being acknowledged individually. With individual acknowledgement, the consumer acknowledges each message and sends an acknowledgement request to the broker.

- Being acknowledged cumulatively. With cumulative acknowledgement, the consumer only acknowledges the last message it received. All messages in the stream up to (and including) the provided message are not redelivered to that consumer.

If you want to acknowledge messages individually, you can use the following API.

consumer.acknowledge(msg);

If you want to acknowledge messages cumulatively, you can use the following API.

consumer.acknowledgeCumulative(msg);

Cumulative acknowledgement cannot be used in the shared subscription mode, because the shared subscription mode involves multiple consumers who have access to the same subscription. In the shared subscription mode, messages are acknowledged individually.
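The difference between the two acknowledgement styles can be modeled with message positions as increasing numbers. This is a hypothetical sketch, not Pulsar's cursor implementation. acknowledge() marks a single position, while acknowledgeCumulative() marks everything up to and including the given position.

```java
import java.util.TreeSet;

// Hypothetical model of one consumer's acknowledgement state, with message
// positions as increasing longs. Not Pulsar's cursor implementation.
class AckStateSketch {
    private long cumulativeUpTo = -1;                   // everything <= this is acknowledged
    private final TreeSet<Long> individuallyAcked = new TreeSet<>();

    /** Individual acknowledgement: marks exactly one position. */
    void acknowledge(long position) {
        if (position > cumulativeUpTo) {
            individuallyAcked.add(position);
        }
    }

    /** Cumulative acknowledgement: marks every position up to and including this one. */
    void acknowledgeCumulative(long position) {
        cumulativeUpTo = Math.max(cumulativeUpTo, position);
        // Individual marks at or below the cumulative point are now redundant.
        individuallyAcked.headSet(cumulativeUpTo, true).clear();
    }

    boolean isAcked(long position) {
        return position <= cumulativeUpTo || individuallyAcked.contains(position);
    }
}
```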

Negative acknowledgement

When a consumer fails to consume a message and intends to consume it again, this consumer should send a negative acknowledgement to the broker. Then, the broker will redeliver this message to the consumer.

Messages are negatively acknowledged individually or cumulatively, depending on the subscription mode.

In the exclusive and failover subscription modes, consumers only negatively acknowledge the last message they receive.

In the shared and Key_Shared subscription modes, consumers can negatively acknowledge messages individually.

Be aware that negative acknowledgements on ordered subscription types, such as Exclusive, Failover, and Key_Shared, might cause failed messages to be sent to consumers out of their original order.

If you want to acknowledge messages negatively, you can use the following API.

// Negatively acknowledge the message so that the broker redelivers it

consumer.negativeAcknowledge(msg);

If batching is enabled, all messages in one batch are redelivered to the consumer.

Acknowledgement timeout

If a message is not consumed successfully, and you want the broker to redeliver this message automatically, you can enable the automatic redelivery mechanism for unacknowledged messages. With automatic redelivery enabled, the client tracks unacknowledged messages. When a message stays unacknowledged for longer than the configured acknowledgement timeout, the client automatically sends a request to the broker to redeliver the unacknowledged messages.

- If batching is enabled, all messages in one batch are redelivered to the consumer.

- The negative acknowledgement is preferable over the acknowledgement timeout, since negative acknowledgement controls the redelivery of individual messages more precisely and avoids invalid redeliveries when the message processing time exceeds the acknowledgement timeout.
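The client-side bookkeeping behind the acknowledgement timeout can be sketched with an explicit clock, which keeps it deterministic. AckTimeoutSketch is a hypothetical name, and this is not the Pulsar client's tracker. It also illustrates the drawback noted above: a message that is still being processed is reported for redelivery once the timeout passes.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical client-side tracker for the acknowledgement timeout, driven by an
// explicit clock value so it is deterministic. Not the Pulsar client's code.
class AckTimeoutSketch {
    private final long ackTimeoutMillis;
    private final Map<Long, Long> receivedAt = new LinkedHashMap<>(); // messageId -> receive time

    AckTimeoutSketch(long ackTimeoutMillis) {
        this.ackTimeoutMillis = ackTimeoutMillis;
    }

    void onReceive(long messageId, long nowMillis) {
        receivedAt.put(messageId, nowMillis);
    }

    void onAck(long messageId) {
        receivedAt.remove(messageId);
    }

    /** Returns (and stops tracking) messages unacknowledged for longer than the timeout. */
    List<Long> expired(long nowMillis) {
        List<Long> redeliver = new ArrayList<>();
        Iterator<Map.Entry<Long, Long>> it = receivedAt.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<Long, Long> e = it.next();
            if (nowMillis - e.getValue() > ackTimeoutMillis) {
                redeliver.add(e.getKey());
                it.remove();
            }
        }
        return redeliver;
    }
}
```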

Dead letter topic

Dead letter topic enables you to consume new messages when some messages cannot be consumed successfully by a consumer. In this mechanism, messages that fail to be consumed are stored in a separate topic, called the dead letter topic. You can decide how to handle messages in the dead letter topic.

The following example shows how to enable dead letter topic in a Java client using the default dead letter topic:

Consumer<byte[]> consumer = pulsarClient.newConsumer(Schema.BYTES)
        .topic(topic)
        .subscriptionName("my-subscription")
        .subscriptionType(SubscriptionType.Shared)
        .deadLetterPolicy(DeadLetterPolicy.builder()
                .maxRedeliverCount(maxRedeliveryCount)
                .build())
        .subscribe();

The default dead letter topic uses this format:

<topicname>-<subscriptionname>-DLQ

- For Pulsar 2.6.x and 2.7.x, the default dead letter topic uses the format of <subscriptionname>-DLQ. If you upgrade from 2.6.x~2.7.x to 2.8.x or later, you need to delete historical dead letter topics and retry letter partitioned topics. Otherwise, Pulsar continues to use the original topics, which are formatted with <subscriptionname>-DLQ.

- It is not recommended to use <subscriptionname>-DLQ, because if multiple topics under the same namespace have the same subscription, their dead letter topic names might be the same, which results in mutual consumption across those topics.

If you want to specify the name of the dead letter topic, use this Java client example:

Consumer<byte[]> consumer = pulsarClient.newConsumer(Schema.BYTES)
        .topic(topic)
        .subscriptionName("my-subscription")
        .subscriptionType(SubscriptionType.Shared)
        .deadLetterPolicy(DeadLetterPolicy.builder()
                .maxRedeliverCount(maxRedeliveryCount)
                .deadLetterTopic("your-topic-name")
                .build())
        .subscribe();

Dead letter topic depends on message redelivery. Messages are redelivered either due to acknowledgement timeout or negative acknowledgement. If you are going to use negative acknowledgement on a message, make sure it is negatively acknowledged before the acknowledgement timeout.

Currently, dead letter topics are supported in the Shared and Key_Shared subscription modes.
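The two dead letter topic naming formats, and the reason the older one can collide, can be checked with a couple of string helpers. These are hypothetical helpers written for illustration (Pulsar derives the names internally), and the topic names in the example are made up.

```java
// Hypothetical helpers illustrating the dead letter topic naming formats.
// Pulsar derives these names internally; this is not its code.
final class DeadLetterNaming {
    private DeadLetterNaming() {}

    /** Default format for 2.8.x and later: <topicname>-<subscriptionname>-DLQ */
    static String defaultDlq(String topicName, String subscriptionName) {
        return topicName + "-" + subscriptionName + "-DLQ";
    }

    /** Older 2.6.x/2.7.x format: <subscriptionname>-DLQ */
    static String legacyDlq(String subscriptionName) {
        return subscriptionName + "-DLQ";
    }
}
```

With the legacy format, topics named orders and payments that share the subscription my-subscription would both map to my-subscription-DLQ. The default format keeps them apart as orders-my-subscription-DLQ and payments-my-subscription-DLQ.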

Retry letter topic

For many online business systems, messages need to be re-consumed when an exception occurs during business logic processing. To configure the delay time for re-consuming the failed messages, you can configure the producer to send messages to both the business topic and the retry letter topic, and enable automatic retry on the consumer. When automatic retry is enabled on the consumer, messages that fail to be consumed are stored in the retry letter topic, and the consumer automatically consumes them from the retry letter topic after a specified delay.

By default, automatic retry is disabled. You can set enableRetry to true to enable automatic retry on the consumer.

This example shows how to consume messages from a retry letter topic.

Consumer<byte[]> consumer = pulsarClient.newConsumer(Schema.BYTES)
        .topic(topic)
        .subscriptionName("my-subscription")
        .subscriptionType(SubscriptionType.Shared)
        .enableRetry(true)
        .receiverQueueSize(100)
        .deadLetterPolicy(DeadLetterPolicy.builder()
                .maxRedeliverCount(maxRedeliveryCount)
                .retryLetterTopic("persistent://my-property/my-ns/my-subscription-custom-Retry")
                .build())
        .subscriptionInitialPosition(SubscriptionInitialPosition.Earliest)
        .subscribe();

If you want to put a message into the retry letter topic, you can use the following API.

consumer.reconsumeLater(msg, 3, TimeUnit.SECONDS);
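The delay semantics of reconsumeLater() can be sketched as a priority queue ordered by delivery time. RetryDelaySketch is a hypothetical model with an explicit clock. It is not how Pulsar stores messages in the retry letter topic, and it only shows that a message becomes consumable again once its delay has elapsed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical model of reconsumeLater(): a failed message is parked with a delivery
// time and becomes consumable again only after the delay elapses.
// Uses an explicit clock value; not Pulsar's retry letter topic implementation.
class RetryDelaySketch {
    private static final class Parked {
        final String message;
        final long deliverAtMillis;

        Parked(String message, long deliverAtMillis) {
            this.message = message;
            this.deliverAtMillis = deliverAtMillis;
        }
    }

    private final PriorityQueue<Parked> retryTopic =
            new PriorityQueue<Parked>((x, y) -> Long.compare(x.deliverAtMillis, y.deliverAtMillis));

    void reconsumeLater(String message, long delay, TimeUnit unit, long nowMillis) {
        retryTopic.add(new Parked(message, nowMillis + unit.toMillis(delay)));
    }

    /** Messages whose delay has elapsed, in delivery-time order. */
    List<String> due(long nowMillis) {
        List<String> ready = new ArrayList<>();
        while (!retryTopic.isEmpty() && retryTopic.peek().deliverAtMillis <= nowMillis) {
            ready.add(retryTopic.poll().message);
        }
        return ready;
    }
}
```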

Topics

As in other pub-sub systems, topics in Pulsar are named channels for transmitting messages from producers to consumers. Topic names are URLs that have a well-defined structure:

{persistent|non-persistent}://tenant/namespace/topic

| Topic name component | Description |
|---|---|
| persistent / non-persistent | This identifies the type of topic. Pulsar supports two kinds of topics: persistent and non-persistent. The default is persistent, so if you do not specify a type, the topic is persistent. With persistent topics, all messages are durably persisted on disks (if the broker is not standalone, messages are durably persisted on multiple disks), whereas data for non-persistent topics is not persisted to storage disks. |
| tenant | The topic tenant within the instance. Tenants are essential to multi-tenancy in Pulsar, and spread across clusters. |
| namespace | The administrative unit of the topic, which acts as a grouping mechanism for related topics. Most topic configuration is performed at the namespace level. Each tenant has one or multiple namespaces. |
| topic | The final part of the name. Topic names have no special meaning in a Pulsar instance. |

No need to explicitly create new topics

You do not need to explicitly create topics in Pulsar. If a client attempts to write or receive messages to/from a topic that does not yet exist, Pulsar automatically creates that topic under the namespace provided in the topic name. If no tenant or namespace is specified when a client creates a topic, the topic is created in the default tenant and namespace. You can also create a topic in a specified tenant and namespace, such as persistent://my-tenant/my-namespace/my-topic. This name means that the my-topic topic is created in the my-namespace namespace of the my-tenant tenant.
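The topic-name structure lends itself to a small parser. TopicNameSketch is hypothetical: Pulsar ships its own TopicName class, which this does not reproduce. The sketch assumes that the default tenant and namespace are public and default, consistent with the persistent://public/default/... names used elsewhere in this document.

```java
// Hypothetical parser for {persistent|non-persistent}://tenant/namespace/topic.
// Assumes the default tenant "public" and namespace "default" for short names.
// Not Pulsar's TopicName class.
final class TopicNameSketch {
    final String domain;
    final String tenant;
    final String namespace;
    final String localName;

    private TopicNameSketch(String domain, String tenant, String namespace, String localName) {
        this.domain = domain;
        this.tenant = tenant;
        this.namespace = namespace;
        this.localName = localName;
    }

    static TopicNameSketch parse(String name) {
        String domain = "persistent";                   // default topic type
        String rest = name;
        int sep = name.indexOf("://");
        if (sep >= 0) {
            domain = name.substring(0, sep);
            if (!domain.equals("persistent") && !domain.equals("non-persistent")) {
                throw new IllegalArgumentException("Unknown topic type: " + domain);
            }
            rest = name.substring(sep + 3);
        }
        String[] parts = rest.split("/", -1);
        if (parts.length == 1) {
            return new TopicNameSketch(domain, "public", "default", parts[0]);
        }
        if (parts.length == 3) {
            return new TopicNameSketch(domain, parts[0], parts[1], parts[2]);
        }
        throw new IllegalArgumentException("Expected tenant/namespace/topic: " + name);
    }

    @Override
    public String toString() {
        return domain + "://" + tenant + "/" + namespace + "/" + localName;
    }
}
```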

Namespaces

A namespace is a logical nomenclature within a tenant. A tenant creates multiple namespaces via the admin API. For instance, a tenant with different applications can create a separate namespace for each application. A namespace allows the application to create and manage a hierarchy of topics. For example, my-tenant/app1 is the namespace for the application app1 of my-tenant. You can create any number of topics under the namespace.

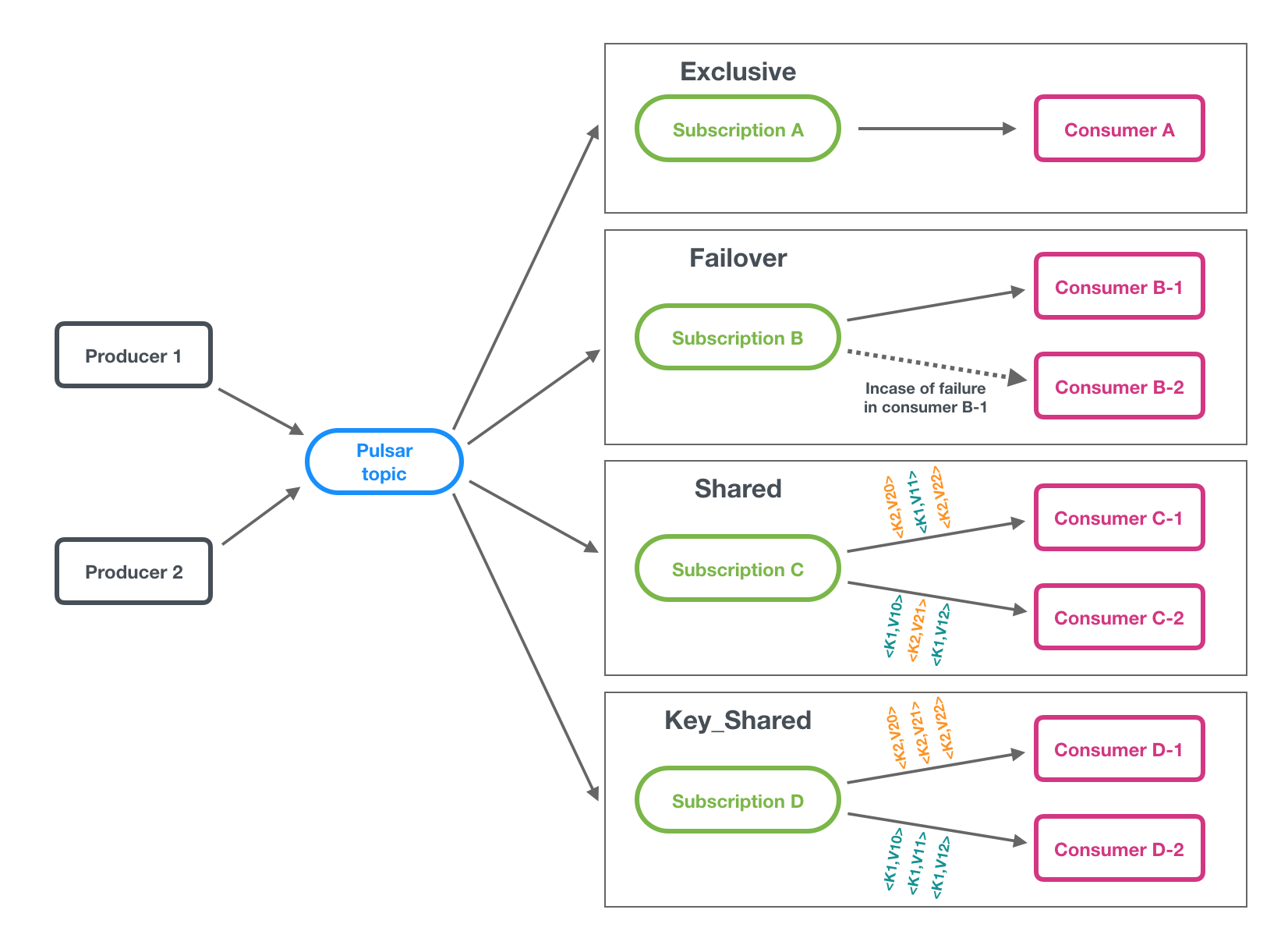

Subscriptions

A subscription is a named configuration rule that determines how messages are delivered to consumers. Four subscription modes are available in Pulsar: exclusive, shared, failover, and key_shared. These modes are illustrated in the figure below.

Pub-Sub or Queuing

In Pulsar, you can use different subscriptions flexibly.

- If you want to achieve traditional "fan-out pub-sub messaging" among consumers, specify a unique subscription name for each consumer. This is the exclusive subscription mode.

- If you want to achieve "message queuing" among consumers, share the same subscription name among multiple consumers (shared, failover, key_shared).

- If you want to achieve both effects simultaneously, combine exclusive subscription mode with other subscription modes for consumers.

Consumerless Subscriptions and Their Corresponding Modes

When a subscription has no consumers, its subscription mode is undefined. A subscription's mode is defined when a consumer connects to the subscription, and the mode can be changed by restarting all consumers with a different configuration.

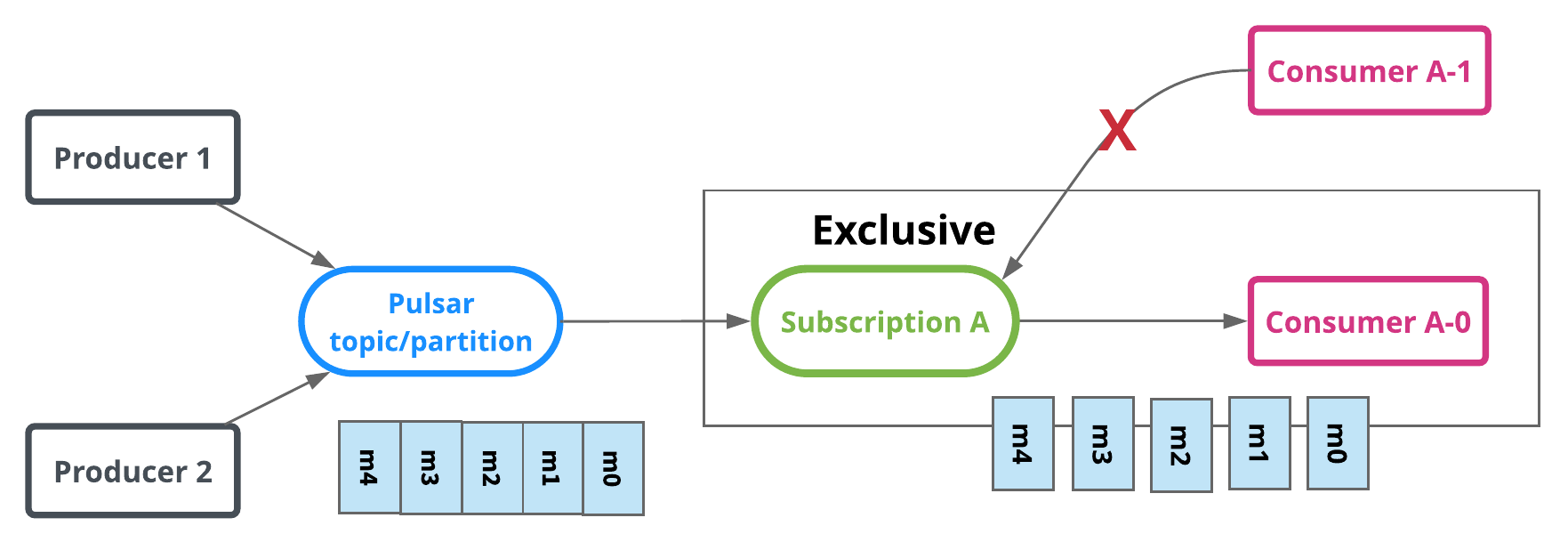

Exclusive

In exclusive mode, only a single consumer is allowed to attach to the subscription. If multiple consumers subscribe to a topic using the same subscription, an error occurs.

In the diagram below, only Consumer A-0 is allowed to consume messages.

Exclusive mode is the default subscription mode.

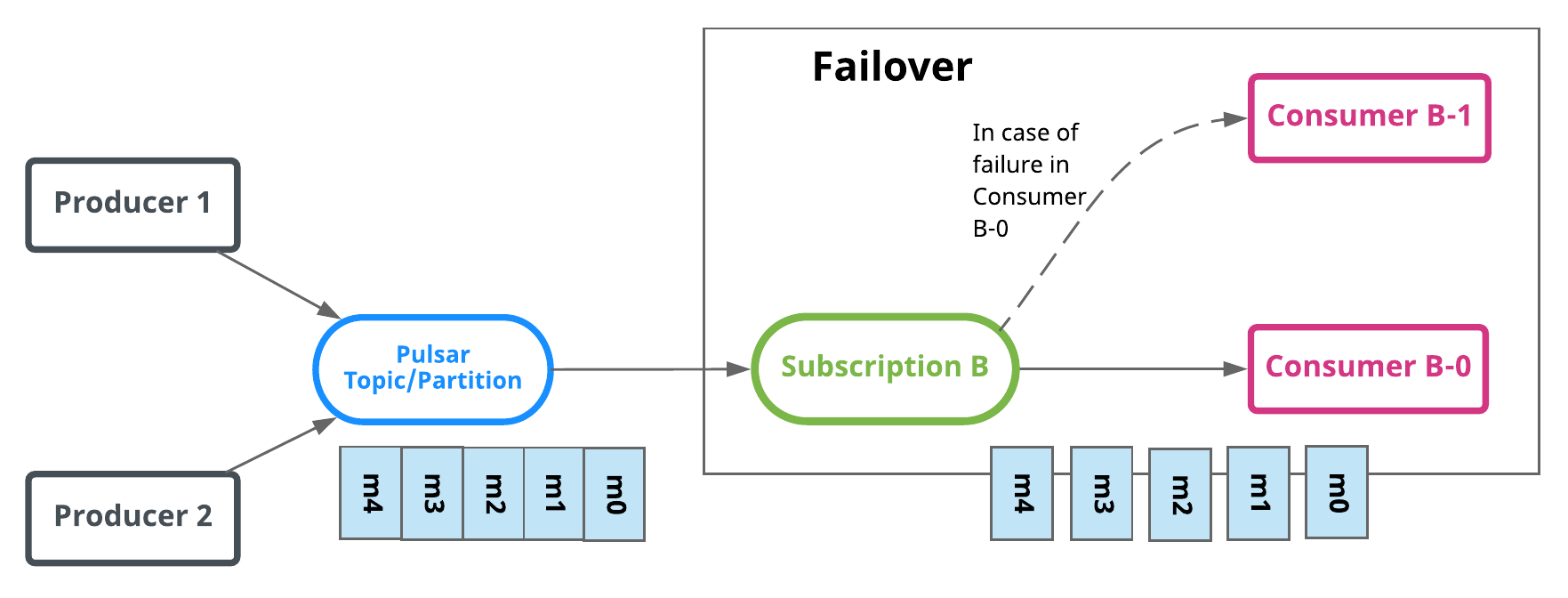

Failover

In failover mode, multiple consumers can attach to the same subscription. A master consumer is picked for a non-partitioned topic, or for each partition of a partitioned topic, and receives messages. When the master consumer disconnects, all (non-acknowledged and subsequent) messages are delivered to the next consumer in line.

For partitioned topics, the broker sorts consumers by priority level and by the lexicographical order of consumer names. The broker then tries to evenly assign partitions to the consumers with the highest priority level.

For a non-partitioned topic, the broker picks consumers in the order in which they subscribe to the topic.

In the diagram below, Consumer-B-0 is the master consumer while Consumer-B-1 would be the next consumer in line to receive messages if Consumer-B-0 is disconnected.

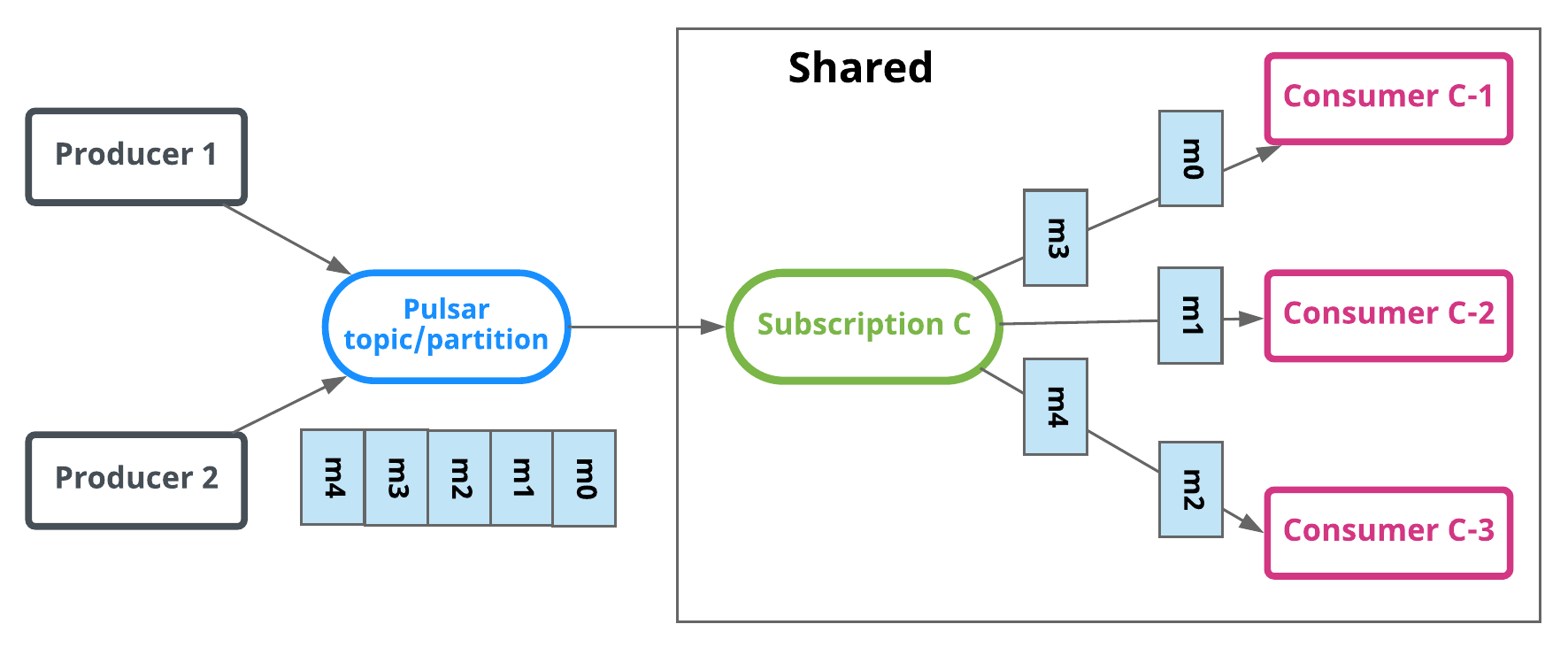
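The failover selection rules for a partitioned topic can be sketched as sort-then-distribute. This hypothetical model makes two assumptions that the text does not state: a lower priorityLevel number means higher priority, and among the highest-priority consumers, partition i goes to consumer i mod n after sorting by name. Pulsar's actual assignment may differ in detail.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of failover assignment for a partitioned topic.
// Assumptions: lower priorityLevel = higher priority; among the top-priority
// consumers, partition i goes to consumer (i mod n) after sorting by name.
class FailoverSketch {
    static final class ConsumerInfo {
        final String name;
        final int priorityLevel;

        ConsumerInfo(String name, int priorityLevel) {
            this.name = name;
            this.priorityLevel = priorityLevel;
        }
    }

    /** Returns the active consumer for each partition index. */
    static Map<Integer, String> assign(List<ConsumerInfo> consumers, int numPartitions) {
        Map<Integer, String> active = new LinkedHashMap<>();
        if (consumers.isEmpty()) {
            return active;
        }
        List<ConsumerInfo> sorted = new ArrayList<>(consumers);
        sorted.sort(Comparator.comparingInt((ConsumerInfo c) -> c.priorityLevel)
                .thenComparing((ConsumerInfo c) -> c.name));   // lexicographical within a level

        int best = sorted.get(0).priorityLevel;
        List<ConsumerInfo> top = new ArrayList<>();
        for (ConsumerInfo c : sorted) {
            if (c.priorityLevel == best) {
                top.add(c);
            }
        }
        for (int p = 0; p < numPartitions; p++) {
            active.put(p, top.get(p % top.size()).name);       // spread partitions evenly
        }
        return active;
    }
}
```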

Shared

In shared or round robin mode, multiple consumers can attach to the same subscription. Messages are delivered in a round robin distribution across consumers, and any given message is delivered to only one consumer. When a consumer disconnects, all the messages that were sent to it and not acknowledged will be rescheduled for sending to the remaining consumers.

In the diagram below, Consumer-C-1 and Consumer-C-2 are able to subscribe to the topic, but Consumer-C-3 and others could as well.

When using shared mode, be aware that:

- Message ordering is not guaranteed.

- You cannot use cumulative acknowledgment with shared mode.

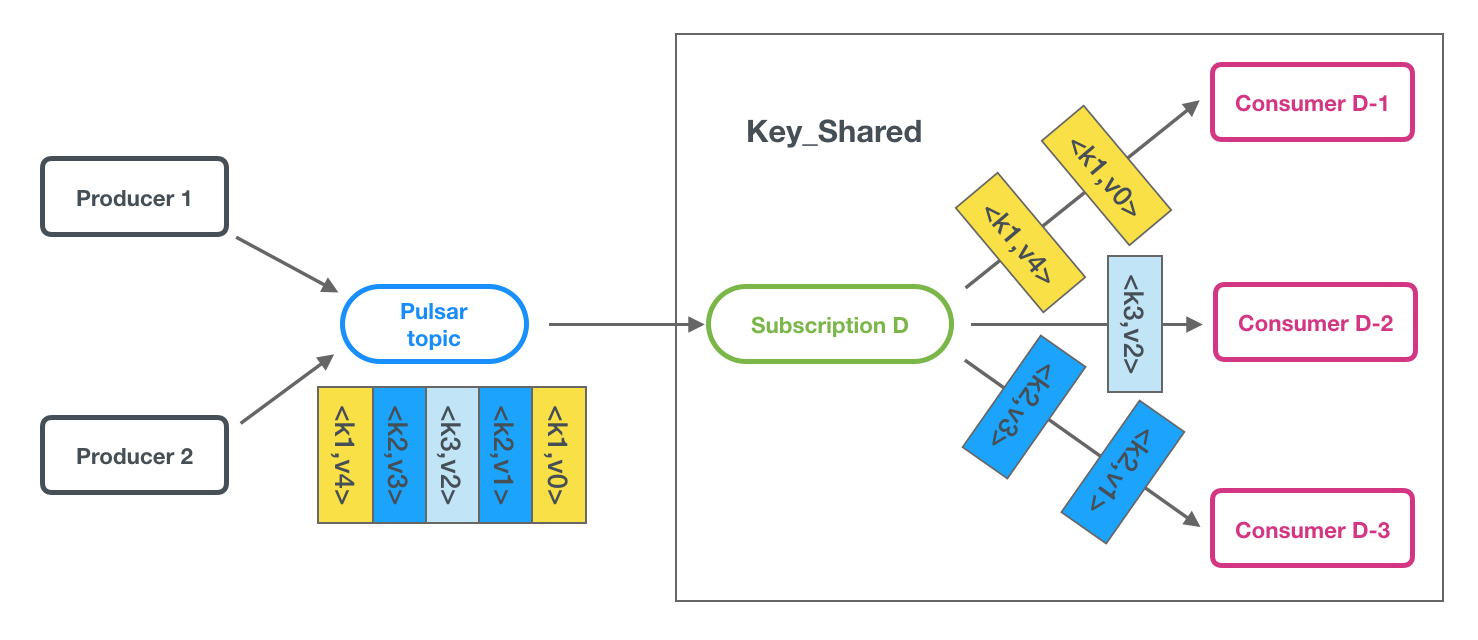
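Round-robin dispatch with rescheduling on disconnect can be sketched as follows. SharedDispatchSketch is a hypothetical model that ignores flow permits and receiver queues. It only shows that each message goes to exactly one consumer and that unacknowledged messages move to the remaining consumers when one disconnects.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical round-robin dispatcher for a shared subscription.
// Not Pulsar's dispatcher (no permits or receiver queues).
class SharedDispatchSketch {
    private final List<String> consumers = new ArrayList<>();
    private final Map<String, List<String>> unacked = new LinkedHashMap<>();
    private int next = 0;

    void addConsumer(String name) {
        consumers.add(name);
        unacked.put(name, new ArrayList<>());
    }

    /** Sends the message to exactly one consumer, rotating through the list. */
    String dispatch(String message) {
        if (consumers.isEmpty()) {
            throw new IllegalStateException("no consumers connected");
        }
        String target = consumers.get(next % consumers.size());
        next = (next + 1) % consumers.size();
        unacked.get(target).add(message);
        return target;
    }

    void ack(String consumer, String message) {
        unacked.get(consumer).remove(message);
    }

    /** Removes a consumer and redispatches its unacknowledged messages to the others. */
    void disconnect(String name) {
        List<String> pending = unacked.remove(name);
        consumers.remove(name);
        next = 0;
        for (String m : pending) {
            dispatch(m);
        }
    }

    List<String> unackedFor(String consumer) {
        return unacked.get(consumer);
    }
}
```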

Key_Shared

In Key_Shared mode, multiple consumers can attach to the same subscription. Messages are distributed across consumers, and messages with the same key or the same ordering key are delivered to only one consumer. No matter how many times a message is redelivered, it is delivered to the same consumer. When a consumer connects or disconnects, the consumer that serves some of the message keys changes.

Note that when the consumers are using the Key_Shared subscription mode, you need to disable batching or use key-based batching for the producers. There are two reasons why the key-based batching is necessary for Key_Shared subscription mode:

- The broker dispatches messages according to the keys of the messages, but the default batching approach might fail to pack the messages with the same key to the same batch.

- Since it is the consumers instead of the broker who dispatch the messages from the batches, the key of the first message in one batch is considered as the key of all messages in this batch, thereby leading to context errors.

The key-based batching aims at resolving the above-mentioned issues. This batching method ensures that the producers pack the messages with the same key to the same batch. The messages without a key are packed into one batch and this batch has no key. When the broker dispatches messages from this batch, it uses NON_KEY as the key. In addition, each consumer is associated with only one key and should receive only one message batch for the connected key. By default, you can limit batching by configuring the number of messages that producers are allowed to send.

Below are examples of enabling the key-based batching under the Key_Shared subscription mode, with client being the Pulsar client that you created.

Java:

Producer<byte[]> producer = client.newProducer()
        .topic("my-topic")
        .batcherBuilder(BatcherBuilder.KEY_BASED)
        .create();

C++:

ProducerConfiguration producerConfig;
producerConfig.setBatchingType(ProducerConfiguration::BatchingType::KeyBasedBatching);
Producer producer;
client.createProducer("my-topic", producerConfig, producer);

Python:

producer = client.create_producer(topic='my-topic', batching_type=pulsar.BatchingType.KeyBased)

When you use Key_Shared mode, be aware that:

- You need to specify a key or orderingKey for messages.

- You cannot use cumulative acknowledgment with Key_Shared mode.
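The grouping performed by key-based batching can be sketched independently of the client. This hypothetical model is not the BatcherBuilder.KEY_BASED implementation. It puts messages with the same key into the same batch, collects key-less messages under NON_KEY, and uses an illustrative maxBatchSize to cap each batch.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of key-based batching. Not the BatcherBuilder.KEY_BASED
// implementation: maxBatchSize is an illustrative cap on messages per batch.
class KeyBasedBatchingSketch {
    static final String NON_KEY = "NON_KEY";

    static final class Msg {
        final String key;     // null means the message has no key
        final String value;

        Msg(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    /** Groups messages into batches so that no batch mixes different keys. */
    static Map<String, List<List<String>>> batch(List<Msg> messages, int maxBatchSize) {
        Map<String, List<List<String>>> batchesByKey = new LinkedHashMap<>();
        for (Msg m : messages) {
            String key = (m.key == null) ? NON_KEY : m.key;
            List<List<String>> batches = batchesByKey.computeIfAbsent(key, k -> new ArrayList<>());
            if (batches.isEmpty() || batches.get(batches.size() - 1).size() >= maxBatchSize) {
                batches.add(new ArrayList<>());      // open a new batch for this key
            }
            batches.get(batches.size() - 1).add(m.value);
        }
        return batchesByKey;
    }
}
```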

Multi-topic subscriptions

When a consumer subscribes to a Pulsar topic, by default it subscribes to one specific topic, such as persistent://public/default/my-topic. As of Pulsar version 1.23.0-incubating, however, Pulsar consumers can simultaneously subscribe to multiple topics. You can define a list of topics in two ways:

- On the basis of a regular expression (regex), for example persistent://public/default/finance-.*

- By explicitly defining a list of topics

When subscribing to multiple topics by regex, all topics must be in the same namespace.

When subscribing to multiple topics, the Pulsar client automatically makes a call to the Pulsar API to discover the topics that match the regex pattern or list, and then subscribes to all of them. If any of the topics do not exist, the consumer auto-subscribes to them once the topics are created.

No ordering guarantees across multiple topics

When a producer sends messages to a single topic, all messages are guaranteed to be read from that topic in the same order. However, these guarantees do not hold across multiple topics. So when a producer sends messages to multiple topics, the order in which messages are read from those topics is not guaranteed to be the same.

The following are multi-topic subscription examples for Java.

import java.util.regex.Pattern;
import org.apache.pulsar.client.api.Consumer;
import org.apache.pulsar.client.api.PulsarClient;

// Instantiate the Pulsar client (this service URL assumes a local broker on the default port)
PulsarClient pulsarClient = PulsarClient.builder()
        .serviceUrl("pulsar://localhost:6650")
        .build();

// Subscribe to all topics in a namespace
Pattern allTopicsInNamespace = Pattern.compile("persistent://public/default/.*");
Consumer<byte[]> allTopicsConsumer = pulsarClient.newConsumer()
        .topicsPattern(allTopicsInNamespace)
        .subscriptionName("subscription-1")
        .subscribe();

// Subscribe to a subset of topics in a namespace, based on regex
Pattern someTopicsInNamespace = Pattern.compile("persistent://public/default/foo.*");
Consumer<byte[]> someTopicsConsumer = pulsarClient.newConsumer()
        .topicsPattern(someTopicsInNamespace)
        .subscriptionName("subscription-1")
        .subscribe();

For code examples, see Java.
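Regex-based discovery comes down to filtering a namespace's topic list by a pattern. The sketch below is hypothetical. The topic list is made up (a real client obtains it from the broker), and using a full match via Matcher.matches() is an assumption about how patterns are applied.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical illustration of regex-based topic discovery: from the topics that
// exist in one namespace, keep those whose full name matches the pattern.
class TopicPatternSketch {
    static List<String> matching(Pattern pattern, List<String> topicsInNamespace) {
        List<String> result = new ArrayList<>();
        for (String topic : topicsInNamespace) {
            if (pattern.matcher(topic).matches()) {   // full-name match (an assumption)
                result.add(topic);
            }
        }
        return result;
    }
}
```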

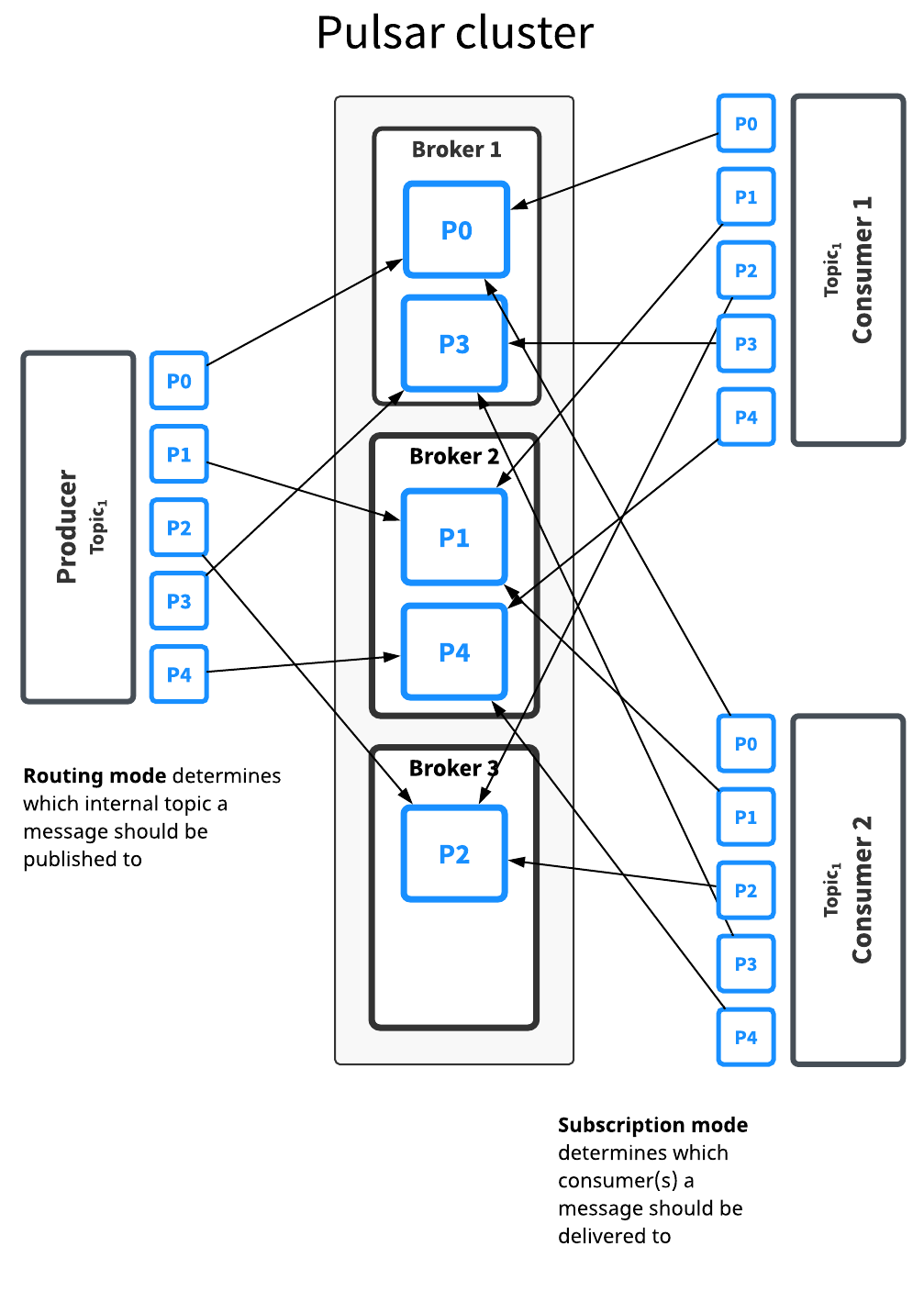

Partitioned topics

Normal topics are served only by a single broker, which limits the maximum throughput of the topic. Partitioned topics are a special type of topic that are handled by multiple brokers, thus allowing for higher throughput.

A partitioned topic is actually implemented as N internal topics, where N is the number of partitions. When publishing messages to a partitioned topic, each message is routed to one of several brokers. The distribution of partitions across brokers is handled automatically by Pulsar.

The diagram below illustrates this:

The Topic1 topic has five partitions (P0 through P4) split across three brokers. Because there are more partitions than brokers, two brokers handle two partitions a piece, while the third handles only one (again, Pulsar handles this distribution of partitions automatically).

Messages for this topic are broadcast to two consumers. The routing mode determines which partition each message is published to, while the subscription mode determines which messages go to which consumers.

Decisions about routing and subscription modes can be made separately in most cases. In general, throughput concerns should guide partitioning/routing decisions while subscription decisions should be guided by application semantics.

There is no difference between partitioned topics and normal topics in terms of how subscription modes work, as partitioning only determines what happens between when a message is published by a producer and processed and acknowledged by a consumer.

Partitioned topics need to be explicitly created via the admin API. The number of partitions can be specified when creating the topic.

Routing modes

When publishing to partitioned topics, you must specify a routing mode. The routing mode determines which partition (that is, which internal topic) each message should be published to.

There are three MessageRoutingMode options available:

| Mode | Description |
|---|---|
| RoundRobinPartition | If no key is provided, the producer publishes messages across all partitions in round-robin fashion to achieve maximum throughput. Round-robin is not applied per individual message; instead, the producer moves to the next partition at the batching-delay boundary, so that batching stays effective. If a key is specified on the message, the partitioned producer hashes the key and assigns the message to a particular partition. This is the default mode. |
| SinglePartition | If no key is provided, the producer randomly picks one single partition and publishes all the messages into that partition. If a key is specified on the message, the partitioned producer hashes the key and assigns the message to a particular partition. |
| CustomPartition | Uses a custom message router implementation that is called to determine the partition for a particular message. You can create a custom routing mode by using the Java client and implementing the MessageRouter interface. |
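The routing decisions in the table can be sketched as follows. This hypothetical RoutingSketch assumes that keys are hashed with String.hashCode() (as JavaStringHash does) and mapped to a partition with a sign-safe modulo, and it models the batching-delay boundary as an explicit nextBatchWindow() call. It is not Pulsar's router code, and CustomPartition is left out.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical sketch of RoundRobinPartition and SinglePartition routing.
// Assumptions: String.hashCode() for keys, Math.floorMod for a non-negative index,
// and round-robin advancing once per batch window. Not Pulsar's router code.
class RoutingSketch {
    private final int numPartitions;
    private final int singlePartition;   // picked once at random for SinglePartition
    private int roundRobinIndex = 0;

    RoutingSketch(int numPartitions) {
        this.numPartitions = numPartitions;
        this.singlePartition = ThreadLocalRandom.current().nextInt(numPartitions);
    }

    int keyPartition(String key) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    /** RoundRobinPartition: keyed messages are hashed; others share the current window's partition. */
    int roundRobin(String keyOrNull) {
        return keyOrNull != null ? keyPartition(keyOrNull) : roundRobinIndex;
    }

    /** Called when a batching-delay window closes, so the next batch goes to the next partition. */
    void nextBatchWindow() {
        roundRobinIndex = (roundRobinIndex + 1) % numPartitions;
    }

    /** SinglePartition: keyed messages are hashed; others always use one random partition. */
    int single(String keyOrNull) {
        return keyOrNull != null ? keyPartition(keyOrNull) : singlePartition;
    }
}
```

In both modes, a keyed message lands on the same partition, which is what the per-key-partition ordering guarantee below relies on.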

Ordering guarantee

The ordering of messages is related to MessageRoutingMode and the message key. Usually, users want a per-key-partition ordering guarantee.

If there is a key attached to message, the messages will be routed to corresponding partitions based on the hashing scheme specified by HashingScheme in ProducerBuilder, when using either SinglePartition or RoundRobinPartition mode.

| Ordering guarantee | Description | Routing mode and key |
|---|---|---|
| Per-key-partition | All the messages with the same key are in order and placed in the same partition. | Use either SinglePartition or RoundRobinPartition mode, and provide a key for each message. |
| Per-producer | All the messages from the same producer are in order. | Use SinglePartition mode, and do not provide a key for messages. |

Hashing scheme

HashingScheme is an enum that represents the set of standard hashing functions available when choosing the partition to use for a particular message.

There are 2 types of standard hashing functions available: JavaStringHash and Murmur3_32Hash.

The default hashing function for producer is JavaStringHash.

Please pay attention that JavaStringHash is not useful when producers can be from different multiple language clients, under this use case, it is recommended to use Murmur3_32Hash.
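The portability problem is easiest to see by writing out what Java's `String.hashCode()` computes: the documented formula `s[0]*31^(n-1) + ... + s[n-1]` over UTF-16 code units, with 32-bit integer overflow. Any non-Java client would have to reproduce exactly this arithmetic to route a key to the same partition as a Java producer. The class `JavaStringHashModel` is hypothetical, and clearing the sign bit before the modulo is an assumption about how a JavaStringHash-style scheme keeps the partition index non-negative.

```java
// Hypothetical helper illustrating a JavaStringHash-style partition choice.
class JavaStringHashModel {
    // Java's documented String.hashCode() formula, evaluated with Horner's rule:
    // h = 31 * h + c for each UTF-16 char, using int arithmetic that wraps on overflow.
    static int javaStringHash(String s) {
        int h = 0;
        for (int i = 0; i < s.length(); i++) {
            h = 31 * h + s.charAt(i);
        }
        return h;
    }

    // Assumed: clear the sign bit so the modulo below is never negative.
    static int partitionFor(String key, int numPartitions) {
        return (javaStringHash(key) & Integer.MAX_VALUE) % numPartitions;
    }
}
```

For example, a client written in a language with arbitrary-precision integers would have to reduce every step modulo 2^32 and reinterpret the result as a signed 32-bit value, and it would also have to iterate over UTF-16 code units rather than bytes or code points. A language-neutral function such as Murmur3 avoids depending on these Java-specific details.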

Non-persistent topics

By default, Pulsar persistently stores all unacknowledged messages on multiple BookKeeper bookies (storage nodes). Data for messages on persistent topics can thus survive broker restarts and subscriber failover.

However, Pulsar also supports non-persistent topics, on which messages are never persisted to disk and live only in memory. When using non-persistent delivery, killing a Pulsar broker or disconnecting a subscriber from a topic means that all in-transit messages on that (non-persistent) topic are lost, so clients may see message loss.

Non-persistent topics have names of this form (note the non-persistent in the name):

```
non-persistent://tenant/namespace/topic
```

For more info on using non-persistent topics, see the Non-persistent messaging cookbook.
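Because persistence is encoded in the topic name itself, application code can tell the two kinds of topics apart by parsing the scheme. The following standard-library sketch splits a fully qualified name of the form `{persistent|non-persistent}://tenant/namespace/topic`. The record `ParsedTopic` and its regular expression are hypothetical illustrations, not Pulsar's own topic-name class, and they do not attempt to validate every naming rule Pulsar enforces.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser for fully qualified names such as non-persistent://tenant/namespace/topic.
record ParsedTopic(boolean persistent, String tenant, String namespace, String topic) {
    private static final Pattern NAME =
            Pattern.compile("^(persistent|non-persistent)://([^/]+)/([^/]+)/(.+)$");

    static ParsedTopic parse(String fullName) {
        Matcher m = NAME.matcher(fullName);
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a fully qualified topic name: " + fullName);
        }
        // Group 1 is the domain; only "persistent" means the topic is stored in BookKeeper.
        return new ParsedTopic(m.group(1).equals("persistent"), m.group(2), m.group(3), m.group(4));
    }
}
```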

In non-persistent topics, brokers immediately deliver messages to all connected subscribers without persisting them in BookKeeper. If a subscriber is disconnected, the broker will not be able to deliver those in-transit messages, and subscribers will never be able to receive those messages again. Eliminating the persistent storage step makes messaging on non-persistent topics slightly faster than on persistent topics in some cases, but with the caveat that some of the core benefits of Pulsar are lost.

With non-persistent topics, message data lives only in memory. If a message broker fails or message data can otherwise not be retrieved from memory, your message data may be lost. Use non-persistent topics only if you're certain that your use case requires it and can sustain it.

By default, non-persistent topics are enabled on Pulsar brokers. You can disable them in the broker's configuration. You can manage non-persistent topics using the pulsar-admin topics command. For more information, see Pulsar admin docs.

Performance

Non-persistent messaging is usually faster than persistent messaging because brokers don't persist messages and send acks back to the producer as soon as the message has been dispatched to connected consumers. Producers therefore see comparatively low publish latency with non-persistent topics.

Client API

Producers and consumers can connect to non-persistent topics in the same way as persistent topics, with the crucial difference that the topic name must start with non-persistent://. All three subscription modes (exclusive, shared, and failover) are supported for non-persistent topics.

Here's an example Java consumer for a non-persistent topic:

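```java
import org.apache.pulsar.client.api.Consumer;
import org.apache.pulsar.client.api.PulsarClient;

// build() and subscribe() throw PulsarClientException
PulsarClient client = PulsarClient.builder()
        .serviceUrl("pulsar://localhost:6650")
        .build();

String npTopic = "non-persistent://public/default/my-topic";
String subscriptionName = "my-subscription-name";

Consumer<byte[]> consumer = client.newConsumer()
        .topic(npTopic)
        .subscriptionName(subscriptionName)
        .subscribe();
```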

Here's an example Java producer for the same non-persistent topic:

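```java
import org.apache.pulsar.client.api.Producer;

// Reuses the client and npTopic defined in the consumer example
Producer<byte[]> producer = client.newProducer()
        .topic(npTopic)
        .create();
```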

System topic

A system topic is a predefined topic for internal use within Pulsar. It can be either a persistent or a non-persistent topic.

System topics serve to implement certain features and eliminate dependencies on third-party components, such as transactions, heartbeat detection, topic-level policies, and resource group services. System topics make these features simpler and more flexible to implement. Take heartbeat detection as an example: the health check uses a system topic so that an internal producer and reader can produce and consume messages under the heartbeat namespace, which detects whether the current service is still alive.

Different namespaces contain different system topics. The following table outlines the available system topics for each namespace.

| Namespace | TopicName | Domain | Count | Usage |
|---|---|---|---|---|
| pulsar/system | transaction_coordinator_assign_${id} | Persistent | Default 16 | Transaction coordinator |
| pulsar/system | _transaction_log${tc_id} | Persistent | Default 16 | Transaction log |
| pulsar/system | resource-usage | Non-persistent | Default 4 | Resource group service |
| host/port | heartbeat | Persistent | 1 | Heartbeat detection |
| User-defined-ns | __change_events | Persistent | Default 4 | Topic events |
| User-defined-ns | __transaction_buffer_snapshot | Persistent | One per namespace | Transaction buffer snapshots |
| User-defined-ns | ${topicName}__transaction_pending_ack | Persistent | One per topic subscription acknowledged with transactions | Acknowledgements with transactions |

- You cannot create any system topics.
- By default, system topics are disabled. To enable system topics, change the following configurations in the conf/broker.conf or conf/standalone.conf file:

  ```properties
  systemTopicEnabled=true
  topicLevelPoliciesEnabled=true
  ```

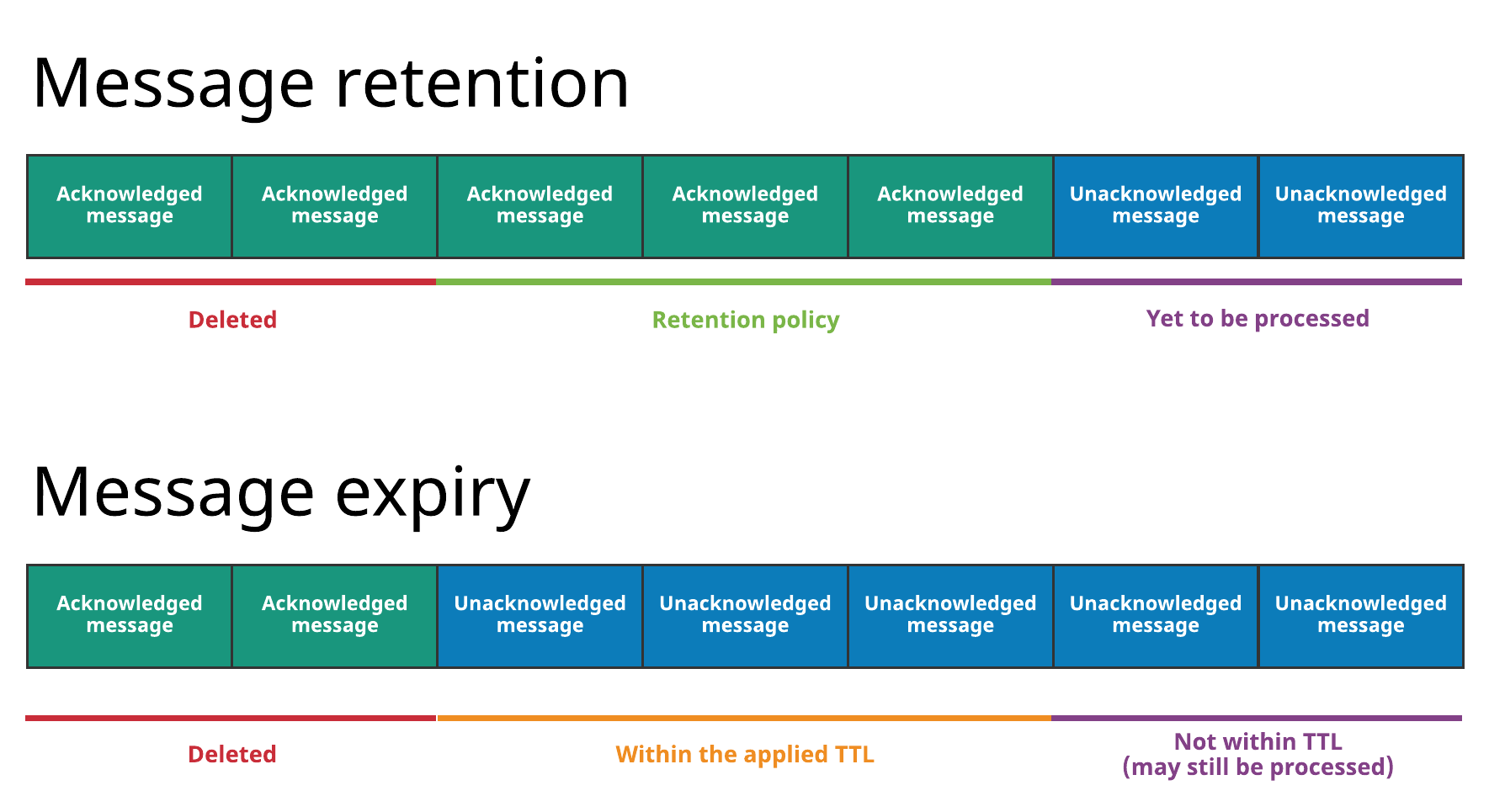

Message retention and expiry

By default, Pulsar message brokers:

- immediately delete all messages that have been acknowledged by a consumer, and

- persistently store all unacknowledged messages in a message backlog.

Pulsar has two features, however, that enable you to override this default behavior:

- Message retention enables you to store messages that have been acknowledged by a consumer

- Message expiry enables you to set a time to live (TTL) for messages that have not yet been acknowledged

Message retention and expiry are managed at the namespace level. For a how-to, see the Message retention and expiry cookbook.

The diagram below illustrates both concepts:

With message retention, shown at the top, a retention policy applied to all topics in a namespace dictates that some messages are durably stored in Pulsar even though they've already been acknowledged. Acknowledged messages that are not covered by the retention policy are deleted. Without a retention policy, all of the acknowledged messages would be deleted.

With message expiry, shown at the bottom, some messages are deleted, even though they haven't been acknowledged, because they've expired according to the TTL applied to the namespace (for example because a TTL of 5 minutes has been applied and the messages haven't been acknowledged but are 10 minutes old).
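The two policies can be summarized as a decision made per message. The sketch below is a conceptual model only: the class `RetentionModel`, its `Fate` enum, and the `fate` method are hypothetical, and the model ignores details such as size-based retention limits. It uses `java.time.Duration` for the message age, the time-based retention window, and the TTL.

```java
import java.time.Duration;

// Conceptual (hypothetical) model of retention and expiry for a single message; not broker code.
class RetentionModel {
    enum Fate { DELETED, RETAINED, BACKLOG, EXPIRED }

    /**
     * @param acked     whether the subscription has acknowledged the message
     * @param age       time since the message was published
     * @param retention time-based retention window (Duration.ZERO means no retention policy)
     * @param ttl       message TTL, or null if no TTL is configured
     */
    static Fate fate(boolean acked, Duration age, Duration retention, Duration ttl) {
        if (acked) {
            // Acknowledged: kept only while still inside the retention window.
            return age.compareTo(retention) < 0 ? Fate.RETAINED : Fate.DELETED;
        }
        // Unacknowledged: stays in the backlog unless it has outlived the TTL.
        return (ttl != null && age.compareTo(ttl) > 0) ? Fate.EXPIRED : Fate.BACKLOG;
    }
}
```

For the example in the text, `fate(false, Duration.ofMinutes(10), Duration.ZERO, Duration.ofMinutes(5))` returns `EXPIRED`, while the same message with no TTL stays in the `BACKLOG`.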

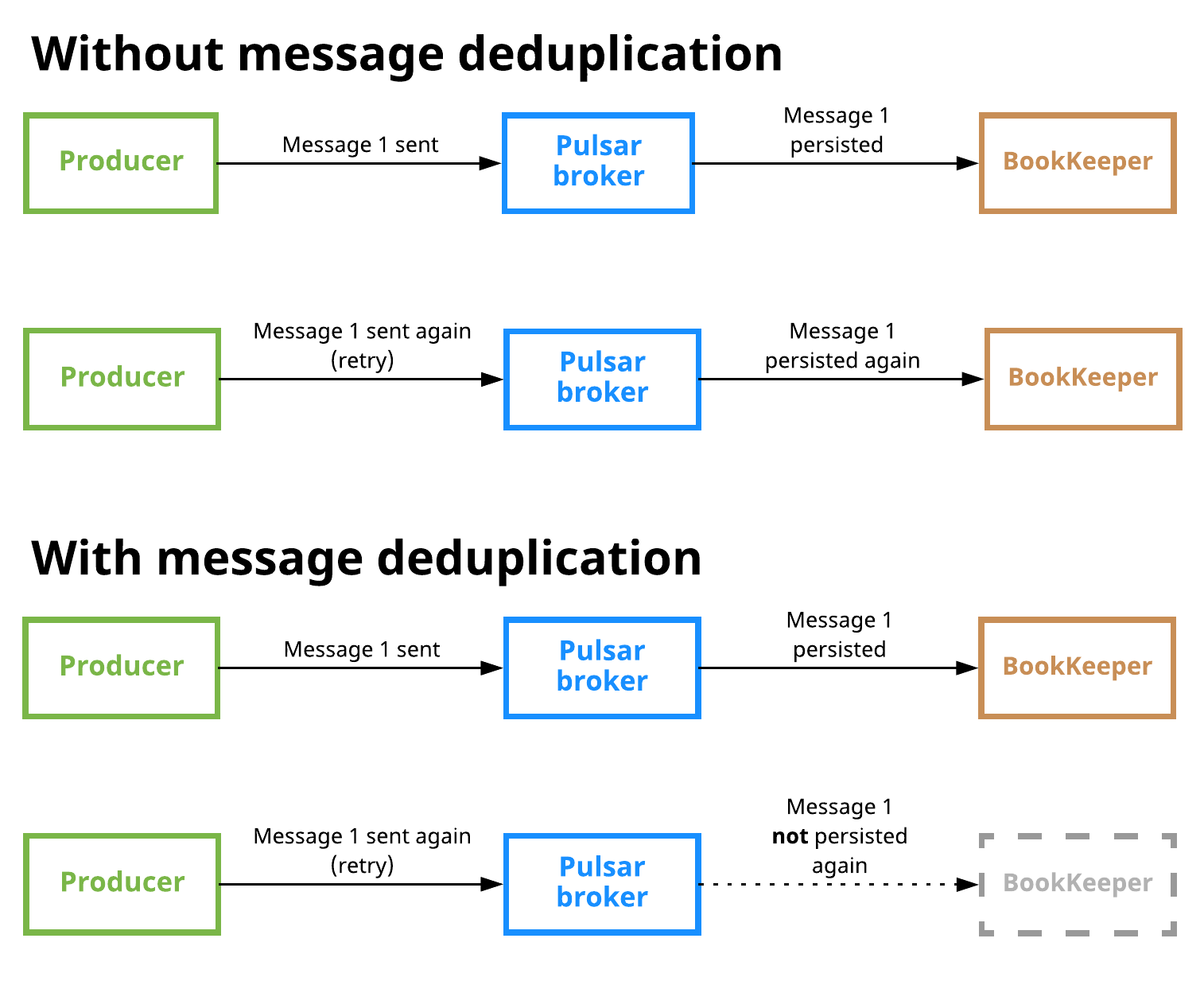

Message deduplication

Message duplication occurs when a message is persisted by Pulsar more than once. Message deduplication is an optional Pulsar feature that prevents unnecessary message duplication by persisting each message only once, even if the message is received more than once.

The following diagram illustrates what happens when message deduplication is disabled vs. enabled:

Message deduplication is disabled in the scenario shown at the top. Here, a producer publishes message 1 on a topic; the message reaches a Pulsar broker and is persisted to BookKeeper. The producer then sends message 1 again (in this case due to some retry logic), and the message is received by the broker and stored in BookKeeper again, which means that duplication has occurred.

In the second scenario at the bottom, the producer publishes message 1, which is received by the broker and persisted, as in the first scenario. When the producer attempts to publish the message again, however, the broker knows that it has already seen message 1 and thus does not persist the message.
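One way for a broker to recognize a repeated message, and the approach this sketch assumes, is to remember the highest sequence ID already persisted for each producer name and skip anything at or below it. The class `DedupModel` is hypothetical and keeps that state in an in-memory map; it leaves out durability of the map and everything else a real broker needs.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified model of deduplication keyed by producer name and sequence ID.
class DedupModel {
    private final Map<String, Long> highestPersisted = new HashMap<>();
    private int persistedCount = 0;

    // Returns true if the message is persisted, false if it is recognized as a duplicate.
    boolean publish(String producerName, long sequenceId) {
        long last = highestPersisted.getOrDefault(producerName, -1L);
        if (sequenceId <= last) {
            return false;   // already seen: the message is not stored again
        }
        highestPersisted.put(producerName, sequenceId);
        persistedCount++;
        return true;
    }

    int persistedCount() {
        return persistedCount;
    }
}
```

Replaying the bottom scenario of the diagram against this model, a retry of message 1 with the same producer name and sequence ID returns `false`, so only one copy is counted as persisted.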

Message deduplication is handled at the namespace level or the topic level. For more instructions, see the message deduplication cookbook.

Producer idempotency

The other available approach to message deduplication is to ensure that each message is only produced once. This approach is typically called producer idempotency. The drawback of this approach is that it defers the work of message deduplication to the application. In Pulsar, deduplication is handled at the broker level, so you do not need to modify your Pulsar client code. Instead, you only need to make administrative changes. For details, see Managing message deduplication.

Deduplication and effectively-once semantics

Message deduplication makes Pulsar an ideal messaging system to be used in conjunction with stream processing engines (SPEs) and other systems seeking to provide effectively-once processing semantics. Messaging systems that do not offer automatic message deduplication require the SPE or other system to guarantee deduplication, which means that strict message ordering comes at the cost of burdening the application with the responsibility of deduplication. With Pulsar, strict ordering guarantees come at no application-level cost.

You can find more in-depth information in this post.

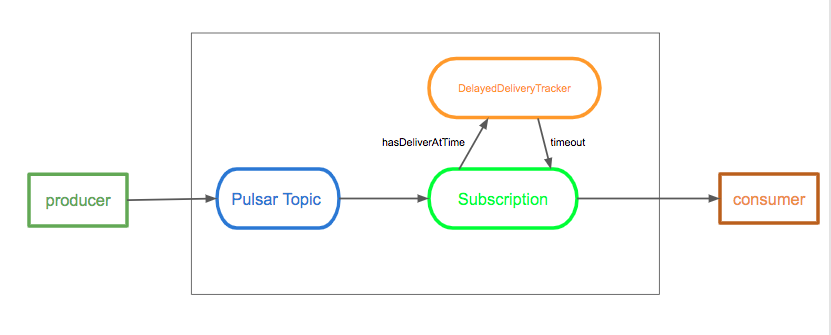

Delayed message delivery

Delayed message delivery enables you to consume a message later rather than immediately. In this mechanism, a message is stored in BookKeeper; after the message is published to a broker, DelayedDeliveryTracker maintains a time index (time -> messageId) in memory, and the message is delivered to a consumer once the specified delay has passed.

Only shared subscriptions support delayed message delivery. In other subscriptions, delayed messages are dispatched immediately.

The diagram below illustrates the concept of delayed message delivery:

A broker saves a message without any check. When a consumer consumes a message, if the message is set to be delayed, the message is added to DelayedDeliveryTracker. A subscription checks DelayedDeliveryTracker and retrieves the messages whose delay has expired.
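The time index (time -> messageId) described above can be modeled as a sorted map, so that all messages whose delivery time has passed are fetched with one range query. The class `DelayedIndexModel` and its methods are hypothetical; this is a sketch of the data structure, not Pulsar's DelayedDeliveryTracker implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical in-memory model of a delayed-delivery time index (deliverAt -> message IDs).
class DelayedIndexModel {
    private final TreeMap<Long, List<Long>> index = new TreeMap<>();

    // Called when a dispatched message turns out to carry a delivery time in the future.
    void add(long deliverAtMillis, long messageId) {
        index.computeIfAbsent(deliverAtMillis, t -> new ArrayList<>()).add(messageId);
    }

    // Removes and returns, in delivery-time order, every message due at or before nowMillis.
    List<Long> pollDue(long nowMillis) {
        NavigableMap<Long, List<Long>> due = index.headMap(nowMillis, true);
        List<Long> result = new ArrayList<>();
        for (List<Long> ids : due.values()) {
            result.addAll(ids);
        }
        due.clear(); // clearing the view also removes these entries from the index
        return result;
    }

    int size() {
        return index.values().stream().mapToInt(List::size).sum();
    }
}
```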

Broker

Delayed message delivery is enabled by default. You can change it in the broker configuration file as follows:

```properties
# Whether to enable the delayed delivery for messages.
# If disabled, messages are immediately delivered and there is no tracking overhead.
delayedDeliveryEnabled=true

# Control the ticking time for the retry of delayed message delivery,
# affecting the accuracy of the delivery time compared to the scheduled time.
# Note that this time is used to configure the HashedWheelTimer's tick time for the
# InMemoryDelayedDeliveryTrackerFactory (the default DelayedDeliveryTrackerFactory).
# Default is 1 second.
delayedDeliveryTickTimeMillis=1000

# When using the InMemoryDelayedDeliveryTrackerFactory (the default DelayedDeliveryTrackerFactory), whether
# the deliverAt time is strictly followed. When false (default), messages may be sent to consumers before the deliverAt
# time by as much as the tickTimeMillis. This can reduce the overhead on the broker of maintaining the delayed index
# for a potentially very short time period. When true, messages are not sent to consumers until the deliverAt time
# has passed, and they may be as late as the deliverAt time plus the tickTimeMillis for the topic plus the
# delayedDeliveryTickTimeMillis.
isDelayedDeliveryDeliverAtTimeStrict=false
```

Producer

The following is an example of delayed message delivery for a producer in Java:

```java
import java.util.concurrent.TimeUnit;

// Assumes producer is a Producer<String>; the message is delivered after a delay of 3 minutes
producer.newMessage().deliverAfter(3L, TimeUnit.MINUTES).value("Hello Pulsar!").send();
```